In popular apps like Facebook, you may have noticed that human faces are detected automatically whenever you upload a photo. Face detection has become a must-have feature for any photo-sharing app.

With the iOS face detection API, you can detect not only faces but also expressions such as a smile or a blink. Face detection has been available since iOS 5, but many people never took note of it.
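As a rough sketch of the smile and blink part: Core Image only reports these flags when they are requested through the `CIDetectorSmile` and `CIDetectorEyeBlink` options. The helper name `describeExpressions(in:)` is hypothetical and used here only for illustration.

```swift
import CoreImage

/// Hypothetical helper (not part of Core Image): returns one summary string
/// per face found in `image`, built from the expression flags Core Image reports.
func describeExpressions(in image: CIImage) -> [String] {
    let detector = CIDetector(ofType: CIDetectorTypeFace,
                              context: nil,
                              options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])

    // Smile and blink analysis is opt-in, so it is requested for this call
    let options: [String: Any] = [CIDetectorSmile: true, CIDetectorEyeBlink: true]
    let features = detector?.features(in: image, options: options) ?? []

    // Keep only the face features and read their expression flags
    return features.compactMap { $0 as? CIFaceFeature }.map { face in
        "smiling: \(face.hasSmile), left eye closed: \(face.leftEyeClosed), right eye closed: \(face.rightEyeClosed)"
    }
}
```

The function returns an empty array when no face is found, so the caller can decide what to show in that case.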

Here I am creating a sample project for face detection using the Core Image framework. You may be surprised at how easy it is to implement.

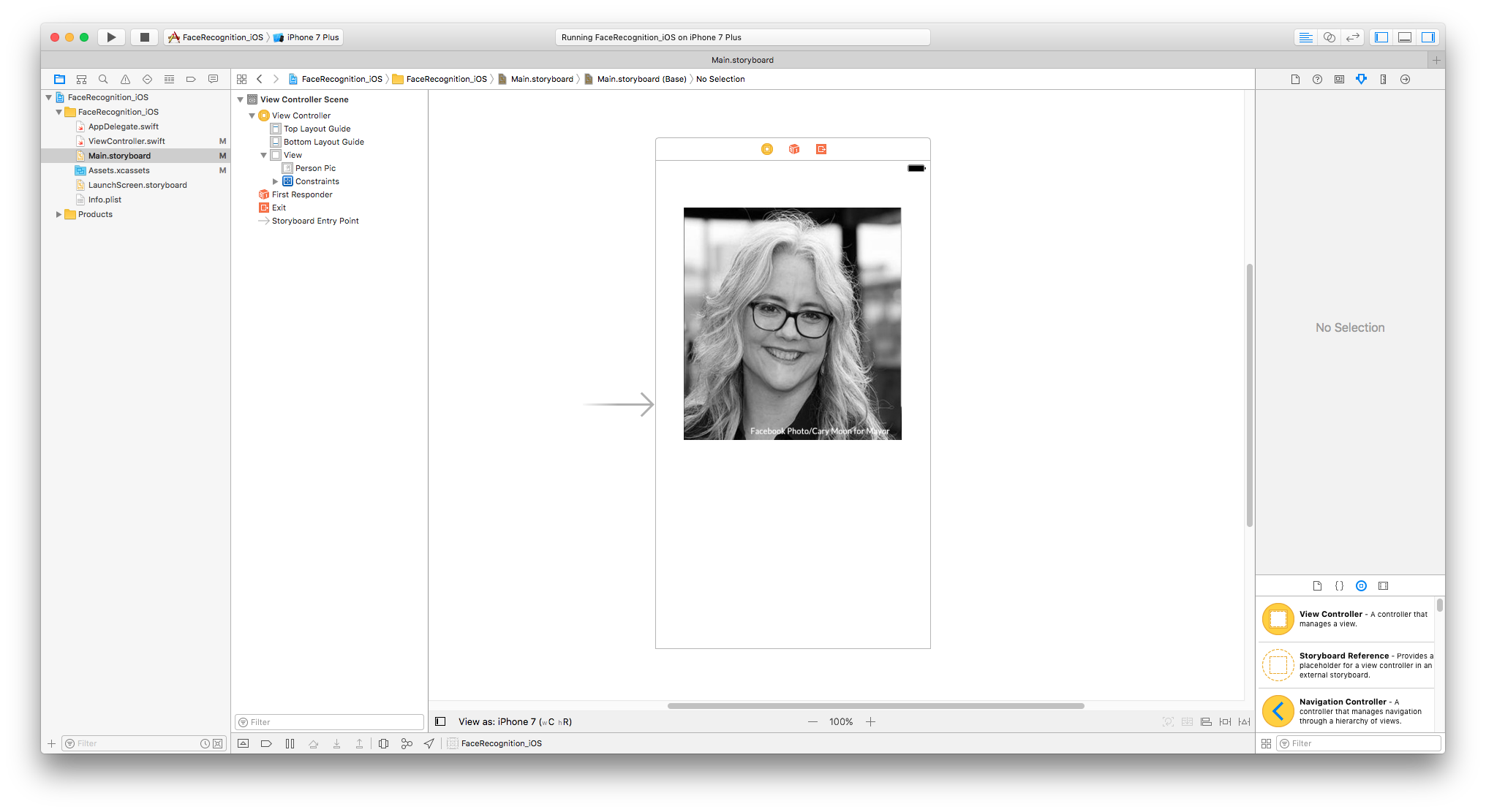

In the storyboard, drag a UIImageView onto the view controller. Set its image to any photo that contains human faces.

After adding the photo to the UIImageView, create an IBOutlet for that UIImageView in the view controller class:

    @IBOutlet weak var collagePic: UIImageView!

Next, here is a simple function that detects faces in the image. You just have to pass the UIImageView as a parameter. It uses the Core Image framework and its methods to do the work. :)

    // Requires `import UIKit` and `import CoreImage` at the top of the file.
    func detect(personciImageV: UIImageView) {
        // Bail out if the image view has no image or it cannot be converted to a CIImage
        guard let uiImage = personciImageV.image,
              let personciImage = CIImage(image: uiImage) else {
            return
        }

        let accuracy = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
        let faceDetector = CIDetector(ofType: CIDetectorTypeFace, context: nil, options: accuracy)

        // features(in:) returns [CIFeature]; keep only the face features
        let faces = faceDetector?.features(in: personciImage).compactMap { $0 as? CIFaceFeature } ?? []

        // For converting the Core Image coordinates (origin at the bottom-left)
        // to UIView coordinates (origin at the top-left)
        let ciImageSize = personciImage.extent.size
        var transform = CGAffineTransform(scaleX: 1, y: -1)
        transform = transform.translatedBy(x: 0, y: -ciImageSize.height)

        for face in faces {
            print("Found bounds are \(face.bounds)")

            // Apply the transform to convert the coordinates
            var faceViewBounds = face.bounds.applying(transform)

            // Calculate the actual position and size of the rectangle in the image view.
            // This math assumes the image view's content mode is .scaleAspectFit.
            let viewSize = personciImageV.bounds.size
            let scale = min(viewSize.width / ciImageSize.width,
                            viewSize.height / ciImageSize.height)
            let offsetX = (viewSize.width - ciImageSize.width * scale) / 2
            let offsetY = (viewSize.height - ciImageSize.height * scale) / 2

            faceViewBounds = faceViewBounds.applying(CGAffineTransform(scaleX: scale, y: scale))
            faceViewBounds.origin.x += offsetX
            faceViewBounds.origin.y += offsetY

            // Draw a red box around the face on top of the image view
            let faceBox = UIView(frame: faceViewBounds)
            faceBox.layer.borderWidth = 3
            faceBox.layer.borderColor = UIColor.red.cgColor
            faceBox.backgroundColor = UIColor.clear
            personciImageV.addSubview(faceBox)

            // Eye positions are points in Core Image coordinates, not rectangles
            if face.hasLeftEyePosition {
                print("Left eye position is \(face.leftEyePosition)")
            }
            if face.hasRightEyePosition {
                print("Right eye position is \(face.rightEyePosition)")
            }
        }
    }
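The coordinate math inside the loop is easier to check in isolation. As a minimal sketch, the hypothetical helper below (the name `viewRect` and its parameters are made up for illustration and are not part of UIKit or Core Image) performs the same flip, scale, and centering with plain arithmetic, assuming the image view displays the image aspect-fit.

```swift
import Foundation

/// Hypothetical helper: maps a face rectangle from Core Image coordinates
/// (origin at the bottom-left of the image) into the coordinate space of a
/// view that shows the image aspect-fit (origin at the top-left).
func viewRect(forFaceBounds faceBounds: CGRect, imageSize: CGSize, viewSize: CGSize) -> CGRect {
    // Flip vertically: the rectangle's top edge in Core Image space becomes its origin
    let flippedY = imageSize.height - faceBounds.origin.y - faceBounds.height

    // Aspect-fit uses the smaller of the two scale factors and centers the image
    let scale = min(viewSize.width / imageSize.width, viewSize.height / imageSize.height)
    let offsetX = (viewSize.width - imageSize.width * scale) / 2
    let offsetY = (viewSize.height - imageSize.height * scale) / 2

    return CGRect(x: faceBounds.origin.x * scale + offsetX,
                  y: flippedY * scale + offsetY,
                  width: faceBounds.width * scale,
                  height: faceBounds.height * scale)
}

// A 400x200 image shown in a 200x200 view is scaled by 0.5 and centered vertically
let rect = viewRect(forFaceBounds: CGRect(x: 40, y: 20, width: 80, height: 40),
                    imageSize: CGSize(width: 400, height: 200),
                    viewSize: CGSize(width: 200, height: 200))
// rect is x: 20, y: 120, width: 40, height: 20
print(rect)
```

Keeping this step as a pure function makes it possible to verify the numbers without a device or a photo. If the image view uses a different content mode, the scale and offsets would need to change accordingly.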

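Now call this method from viewDidLoad(), and don't forget to pass the UIImageView object. The boxes are positioned using the image view's bounds, so if they look misplaced, the view may not have been laid out yet; calling the method from viewDidAppear(_:) instead may help. Here is the call: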

    detect(personciImageV: self.collagePic)

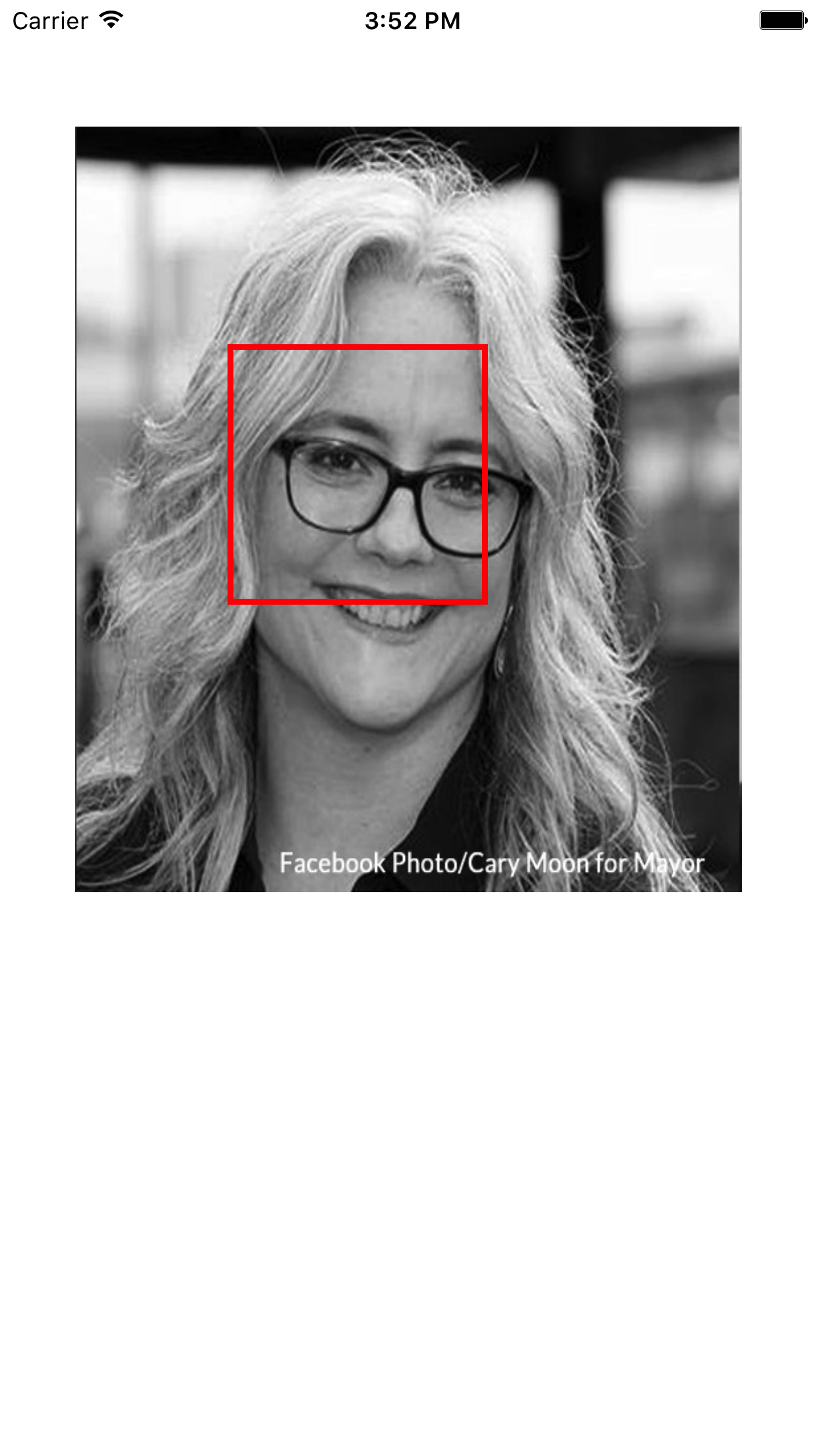

The Output will be like this:

Well, that was fun, wasn't it? Please feel free to share your thoughts and questions in the comments section below.

Happy coding!
